A century has passed since the birth of quantum theory in 1925, when matrix mechanics emerged from Werner Heisenberg’s retreat on Helgoland. Yet, one of the enduring challenges in popularizing quantum mechanics lies in the fact that the theory does not concern itself exclusively with the esoteric workings of nature, but also — and, I believe, more importantly — with how these peculiar principles intertwine with our everyday experience.

Within the community of quantum physicists and researchers, the ways in which these ideas are explained do not always achieve universal acceptance. Unfortunately, the need to formalize these narratives has often exceeded their scope, leading to a proliferation of different interpretations. These interpretations are typically presented as attempts to bridge the gap between puzzling theoretical concepts and observable phenomena. Philosophical tradition recognizes the inherently problematic nature of any clear-cut separation between phenomenology and a ‘reality’ stripped of observational contingencies, but physicists often prefer to overlook these insights. Instead, they repeatedly stumble into fruitless discussions about incorporating the explanation of the so-called measurement problem into the current theoretical framework.

We at NeverLocal strongly believe that cryptography is a field poised to be drastically reshaped by quantum technologies, yet its significance extends far beyond this transformation. It also serves as a lens to clarify some of the most intriguing and confusing aspects of quantum mechanics. By operationalizing the abstract symbolic devices used to describe quantum experiments, cryptography can demystify the core principles of the theory in an accessible way — reducing reliance on the mathematical complexity of formalisms such as Hilbert spaces and complex-linear operators, mere tools to synthesize the deeper essence of natural regularities.

Cryptography’s task is to study these regularities, scrutinizing the assumptions and structures that underpin the security of protocols. In this blog post, we will explore the conceptual motivations driving the rich interplay between cryptography and quantum theory, tracing the profound impact quantum mechanics has had on cryptographic advancements in recent decades.

From Secrecy to Security

Suppose that Alice wants to send a message to Bob secretly. One possibility — though lacking any particular cryptographic sophistication — would be for Alice to establish a confidential, authenticated channel, by carrying a locked suitcase and ensuring that it is only opened in her presence. While this method of establishing a secure connection is effective, the scenarios we will explore in this blog post are different. Specifically, we will ask: Is it possible to transmit private messages over a public channel, one that can be freely accessed by potential eavesdroppers?

The benefits of such an endeavor are clear. One must ensure that the potentially public channel is authenticated, so that the message can be verified as coming from a trusted party, even if secrecy is not guaranteed. There are several techniques to achieve this. One of the most reliable methods for ensuring unconditional secrecy is the one-time pad. This method uses a random secret key: a string of bits as long as the message Alice wants to send to Bob. The key is shared by both parties, and Alice uses modulo-two arithmetic (bitwise XOR) to scramble the message. For example, suppose that Alice wants to send the message $(0,1,0,1,1)$ and that she and Bob share the secret key $(1,1,1,0,1)$. The XORed message $(1,0,1,1,0)$ would appear completely random to anyone intercepting it from the public channel without access to the shared key, while Bob recovers the original message by XORing the cryptogram with the same key.
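The one-time pad is short enough to sketch directly. The snippet below is a minimal illustration rather than a production implementation: the helper name `xor_bits` is our own, and the key is the fixed one from the example, whereas a real key must be freshly drawn for every message.

```python
import secrets


def xor_bits(a, b):
    """Bitwise XOR (addition modulo two) of two equal-length bit sequences."""
    if len(a) != len(b):
        raise ValueError("the key must be exactly as long as the message")
    return tuple(x ^ y for x, y in zip(a, b))


message = (0, 1, 0, 1, 1)
key = (1, 1, 1, 0, 1)  # fixed here only to reproduce the example in the text

ciphertext = xor_bits(message, key)    # what travels over the public channel
recovered = xor_bits(ciphertext, key)  # Bob applies the same key a second time

print(ciphertext)  # (1, 0, 1, 1, 0)
print(recovered)   # (0, 1, 0, 1, 1)

# A real one-time pad needs a fresh, uniformly random key for each message.
fresh_key = tuple(secrets.randbits(1) for _ in message)
```

Decryption works because XORing twice with the same key is the identity. Reusing a key for two messages would leak their XOR to an eavesdropper, which is why the pad is "one-time".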

Under the assumption that the two agents have a source of shared randomness of appropriate length, the public channel between them can be used to perfectly secure the transmission of a secret. Here, we arrive at an essential point: Cryptographic discussions, much like discussions of non-locality and contextuality in quantum theory, can never be made absolute. There is no such thing as absolute security, just as there is no such thing as non-locality without the appropriate assumptions — the contexts that make these notions well-defined in the first place.

The Limits of Perfect Secrecy

The pioneer of a mathematical framework for characterizing the phenomenology of secrecy is also the father of information theory: Claude Shannon. In his work Communication Theory of Secrecy Systems, Shannon introduced the notion of perfect secrecy, defined as the scenario in which, after intercepting the cryptogram (the encrypted message), a malicious third party can only assign a posteriori probabilities to the message that are equal to their a priori knowledge of it. Shannon demonstrated that perfect secrecy is achievable, but it requires the complexity of the secret key shared between the parties to match the complexity of the message itself. This implies that perfect secrecy is inherently impractical, as the entropy of the keys $H(K)$ must be at least as large as the entropy of the message distribution $H(M)$.

The more simplistic interpretation of this fact is often stated as ‘the secret key needs to be at least as long as the message.’ However, a more precise description of this intrinsic limit to security is somewhat harder to articulate. The quantity $H(M)$, now universally known as Shannon entropy, can be understood as describing the amount of information produced when a specific message is chosen:

\[H(M) = -\sum_{m} P(m) \log P(m)\]If there are $n$ equiprobable messages in the message space, the probability of any one occurring is $1/n$, and $H(M) = \log n$. Calculating these entropies is not always straightforward. In his subsequent paper Prediction and Entropy of Printed English, Shannon analyzed entropy using letter- and word-frequency distributions and contextual dependencies. For trigrams (three-letter sequences), he estimated the entropy to be approximately 3.3 bits per letter. The estimation of entropy depends on the contextual information about the type of message being shared between the parties, and these analyses are performed from the perspective of the attacker. Any perfectly secret scheme is therefore inherently impractical; the requirement to share secret keys of similar complexity to the message itself trivializes the task, as most applications only allow for relatively short secret keys.
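As a rough illustration of the entropy formula, the following sketch evaluates it for a uniform and a biased message distribution, and for the empirical letter frequencies of a string. The function names and the example distributions are our own choices, and logarithms are taken in base two so that the result is in bits.

```python
from collections import Counter
from math import log2


def shannon_entropy(probabilities):
    """H = -sum p log2 p, skipping zero-probability outcomes."""
    return -sum(p * log2(p) for p in probabilities if p > 0)


def empirical_entropy(text):
    """Entropy of the single-symbol frequency distribution of `text`."""
    counts = Counter(text)
    total = sum(counts.values())
    return shannon_entropy(c / total for c in counts.values())


uniform = [1 / 8] * 8                # n = 8 equiprobable messages
biased = [0.5, 0.25, 0.125, 0.125]   # some messages are more likely

print(shannon_entropy(uniform))      # 3.0, i.e. log2(8)
print(shannon_entropy(biased))       # 1.75
print(empirical_entropy("abab"))     # 1.0
```

The biased distribution carries less entropy than a uniform one over the same four outcomes (which would give 2 bits), reflecting the fact that an attacker who knows the statistics of the message source starts with an advantage.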

However, practical cryptography is possible, and public-key cryptography mechanisms such as RSA enable us to share secrets using reasonably sized keys. Doesn’t this contradict Shannon’s findings? Here, we return to one of the key points emphasized at the beginning of this blog post: The notion of security is always context-dependent, in the sense that it relies on the framing assumptions that allow the concept to be defined in the first place. The computational security provided by the RSA protocol is based on the assumption that the adversary has limited computational power. Specifically, RSA operates under the assumption that factoring large semiprimes (products of two primes) is computationally intractable. While this assumption has been of substantial practical interest, it is always subject to drastic paradigm shifts. What seems intractable today with classical resources may not remain so with quantum hardware. Notably, Shor’s algorithm for factoring numbers is one of the most celebrated examples of quantum advantage. The advent of quantum hardware capable of factoring large numbers would compromise one of the most widely used security schemes — not by violating the initial assumption but by demonstrating that the framework itself originally overlooked an entire class of practical computational models.
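To make the dependence on the factoring assumption concrete, here is a toy sketch with deliberately tiny, hypothetical parameters (the primes 61 and 53 and the exponent 17 are illustrative choices, not values from any real deployment). It uses textbook RSA without padding, which is insecure in practice, and an attacker who simply factors the modulus by trial division.

```python
from math import gcd

# Toy key generation (hypothetical, insecure parameters for illustration only).
p, q = 61, 53
n = p * q                      # public modulus
phi = (p - 1) * (q - 1)
e = 17                         # public exponent
assert gcd(e, phi) == 1
d = pow(e, -1, phi)            # private exponent (modular inverse, Python 3.8+)


def encrypt(m):
    return pow(m, e, n)


def decrypt(c):
    return pow(c, d, n)


def factor_by_trial_division(modulus):
    """Feasible only because the modulus is tiny."""
    f = 2
    while f * f <= modulus:
        if modulus % f == 0:
            return f, modulus // f
        f += 1
    raise ValueError("modulus is prime")


# An attacker who can factor n rebuilds the private exponent from public data.
p_found, q_found = factor_by_trial_division(n)
d_attacker = pow(e, -1, (p_found - 1) * (q_found - 1))

ciphertext = encrypt(65)
print(decrypt(ciphertext))               # 65
print(pow(ciphertext, d_attacker, n))    # 65, recovered without the private key
```

Nothing in the protocol changes when the attacker succeeds; only the assumption that factoring is out of reach has failed. For moduli of realistic size trial division is hopeless, and that gap is exactly what an efficient quantum factoring algorithm would close.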

We thus see two distinct types of security at play: One that is strictly stronger — unconditional or information-theoretic security — and another that depends on computational assumptions. Despite being sometimes impractical, the former can only be considered more desirable due to its stronger guarantees. However, this raises a question: Can something more be done to salvage information-theoretic security from Shannon’s pessimism? This is precisely what a number of researchers — including Wyner, Maurer, Ahlswede, Csiszár, and Körner — explored during the 1970s to 1990s, a period that coincided with the development of quantum information theory.

Exploiting Channel Asymmetry

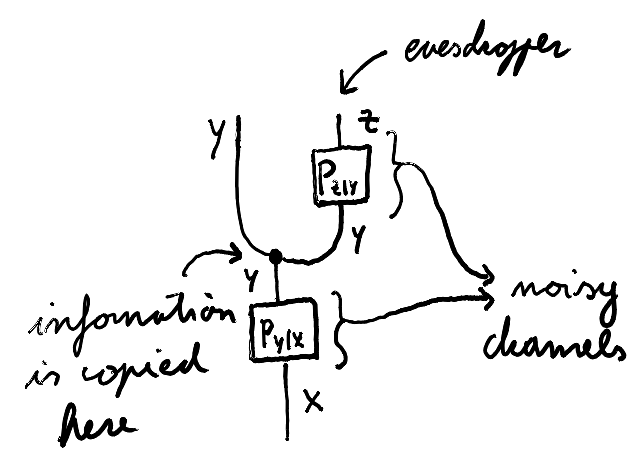

The seminal idea of Wyner is to consider the possibility of limiting the knowledge that an eavesdropper may have about the channel between Alice and Bob. He imagines a scenario where a potentially noisy channel copies the transmitted information, and this copy reaches Eve after passing through an even noisier device. This figure illustrates the causal structure of Wyner’s assumption:

Under this asymmetric setup, Wyner defines the secrecy capacity of the channel, which is the maximum rate at which Alice can reliably share information with Bob in such a way that Eve gains a negligible amount of information. The secrecy capacity, measured in bits per channel use, is shown by Wyner to be equal to:

\[C_s = \max_{P_X}\left[I(X;Y) - I(X;Z)\right]\]where $I(X;Y)$ is the mutual information between Alice and Bob, and $I(X;Z)$ is the mutual information between Alice and Eve (the malicious eavesdropper). The mutual information is a non-negative and symmetric measure of how much a joint probability distribution $P_{XY}(x,y)$ differs from the factorized distribution $P_X(x)P_Y(y)$ arising from its marginals. Formally, for discrete random variables $X$ and $Y$, the mutual information is defined as:

\[I(X; Y) = \sum_{x \in X} \sum_{y \in Y} P_{XY}(x, y) \log \left( \frac{P_{XY}(x, y)}{P_X(x)P_Y(y)} \right).\]Mutual information relates to Shannon entropy through the following relation:

\[I(X; Y) = H(X) + H(Y) - H(X, Y),\]or equivalently:

\[I(X; Y) = H(X) - H(X \mid Y) = H(Y) - H(Y \mid X),\]where $H(X)$ and $H(Y)$ are the marginal entropies of $X$ and $Y$, $H(X, Y)$ is the joint entropy of $X$ and $Y$, and $H(X \mid Y)$ and $H(Y \mid X)$ are the conditional entropies of $X$ given $Y$ and $Y$ given $X$, respectively.

The conditional entropy $H(X \mid Y)$ measures the uncertainty or randomness of a random variable $X$ given that another random variable $Y$ is known. It is defined as:

\[H(X\mid Y) = -\sum_{x \in X} \sum_{y \in Y} P_{XY}(x, y) \log P_{X|Y}(x|y),\]and can also be expressed in terms of the joint entropy $H(X, Y)$ and the marginal entropy $H(Y)$:

\[H(X\mid Y) = H(X, Y) - H(Y).\]Conditional entropy quantifies the remaining uncertainty in one variable after the other is known, and it plays a key role in defining mutual information and understanding the relationships between random variables. Mutual information, in turn, measures the reduction in uncertainty about one variable when the other is known, capturing the statistical dependence between $X$ and $Y$.
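The identities above can be checked numerically. The sketch below assumes, purely for illustration, a uniform input bit sent through a binary symmetric channel that flips it with probability 0.1; the helper names are our own.

```python
from math import log2


def entropy(dist):
    """Shannon entropy (in bits) of a distribution given as {outcome: prob}."""
    return -sum(p * log2(p) for p in dist.values() if p > 0)


def marginal(joint, axis):
    """Marginal of a joint distribution {(x, y): prob} along one coordinate."""
    out = {}
    for outcome, p in joint.items():
        out[outcome[axis]] = out.get(outcome[axis], 0.0) + p
    return out


def mutual_information(joint):
    """I(X;Y) computed directly from the definition."""
    px, py = marginal(joint, 0), marginal(joint, 1)
    return sum(
        p * log2(p / (px[x] * py[y])) for (x, y), p in joint.items() if p > 0
    )


def conditional_entropy(joint):
    """H(X|Y) = H(X,Y) - H(Y)."""
    return entropy(joint) - entropy(marginal(joint, 1))


flip = 0.1  # assumed crossover probability of the channel
joint = {
    (x, y): 0.5 * (flip if x != y else 1 - flip)
    for x in (0, 1)
    for y in (0, 1)
}

i_definition = mutual_information(joint)
i_entropies = entropy(marginal(joint, 0)) + entropy(marginal(joint, 1)) - entropy(joint)
i_conditional = entropy(marginal(joint, 0)) - conditional_entropy(joint)

print(i_definition, i_entropies, i_conditional)  # three matching values
```

All three expressions agree up to floating-point error, at roughly 0.53 bits: knowing the channel output removes about half of the one bit of uncertainty in the input.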

In the definition of the secrecy capacity, the maximum is taken over all probability distributions of the input $X$, representing the optimal strategy to exploit the channel’s asymmetry. Wyner’s result may appear intuitive, but it is remarkable in that it formalizes a concept that would later become a recurring theme, namely the idea that perfect secrecy can be distilled from the gap between Eve’s and Bob’s knowledge of Alice’s message.
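A small numerical sketch may help here. It assumes a specific instance of Wyner's setup that is not spelled out in the text: Bob receives Alice's bit through a binary symmetric channel, and Eve receives Bob's signal through a second binary symmetric channel. The crossover probabilities 0.05 and 0.2 are arbitrary, and the maximization over input distributions is done by a simple grid search.

```python
from math import log2


def h2(p):
    """Binary entropy function in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * log2(p) - (1 - p) * log2(1 - p)


def bsc_mutual_information(a, flip):
    """I(X;Y) for input P(X=1) = a sent through a BSC with crossover `flip`."""
    p_y1 = a * (1 - flip) + (1 - a) * flip
    return h2(p_y1) - h2(flip)


def secrecy_capacity(flip_bob, flip_extra, steps=1000):
    """Grid-search max over P(X=1) of I(X;Y) - I(X;Z) for the cascaded channels."""
    # Eve sees Bob's signal through an additional BSC, so her total crossover is:
    flip_eve = flip_bob * (1 - flip_extra) + (1 - flip_bob) * flip_extra
    best = max(
        bsc_mutual_information(k / steps, flip_bob)
        - bsc_mutual_information(k / steps, flip_eve)
        for k in range(steps + 1)
    )
    return best, flip_eve


cs, flip_eve = secrecy_capacity(flip_bob=0.05, flip_extra=0.2)
closed_form = h2(flip_eve) - h2(0.05)

print(cs, closed_form)  # the two values agree
```

For this symmetric example the maximum is reached at the uniform input, and the secrecy capacity reduces to the difference between the binary entropies of Eve's and Bob's noise levels. If the extra noise on Eve's side is set to zero, the capacity drops to zero, which is the quantitative content of the "gap" mentioned above.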

A New Paradigm

Around the same period, a new approach to information theory was emerging — a final act of the great quantum revolution that began a hundred years ago today. Before Bell’s analysis of non-locality, the quantum revolution undoubtedly seemed strange, but it would have been difficult to argue that it represented a genuine new interface between Nature and the classical notion of information. After all, most physicists seemed to believe that the way information is understood by computer scientists is so inherently emergent — so distant from the fundamental grammar of reality — that there could not be an interaction to meaningfully integrate into the unitary dynamics of physics.

The radical innovation proposed, propelled by Bell’s discovery, was that the limitations and peculiarities of classical information processing could serve as a lens — a standard of comparison — to evaluate properties of the fundamental dynamics implied by quantum theory. (To be fair, this idea was already present in the works of Niels Bohr, though his writings and interpretation of quantum theory, often dismissed by the motto ‘shut up and calculate’, remain largely misunderstood today.) Bell’s theorem essentially states that, under the assumption of a specific causal structure, the correlations predicted by quantum theory cannot be replicated by any classical framework with the same causal structure.
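One standard way to make this statement quantitative is the CHSH form of Bell's inequality, which the text does not name explicitly. The sketch below assumes two parties with two binary measurements each. It enumerates all deterministic classical strategies, and it uses the textbook singlet-state correlation $E = -\cos(\theta_A - \theta_B)$ with a conventional choice of measurement angles for the quantum case.

```python
from itertools import product
from math import cos, pi, sqrt


def chsh(correlator):
    """CHSH combination of the four correlators E(x, y), x and y in {0, 1}."""
    return (
        correlator(0, 0) + correlator(0, 1) + correlator(1, 0) - correlator(1, 1)
    )


# Classical side: each party fixes an outcome (+1 or -1) for each setting.
# Shared randomness only mixes these strategies, so it cannot beat the best one.
classical_max = max(
    abs(a0 * b0 + a0 * b1 + a1 * b0 - a1 * b1)
    for a0, a1, b0, b1 in product((-1, 1), repeat=4)
)

# Quantum side: singlet state, spin measurements along angles in a plane.
alice_angles = (0.0, pi / 2)
bob_angles = (pi / 4, -pi / 4)


def singlet_correlator(x, y):
    return -cos(alice_angles[x] - bob_angles[y])


quantum_value = abs(chsh(singlet_correlator))

print(classical_max)             # 2
print(quantum_value, 2 * sqrt(2))  # both approximately 2.828
```

Under the same causal structure (no communication between the two parties), classical strategies cannot exceed 2, while the quantum correlations reach $2\sqrt{2}$.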

This opens up an important perspective: If we understand quantum computation as encoding information in physical systems, allowing these systems to evolve, and then reading out the outcomes, correlations emerge that would be impossible under similar causal assumptions in classical settings. This consequence not only affects the notion of what is efficiently computable but also challenges the very foundations of information theory.

From No-cloning to BB84

The experimental demonstration of correlations violating Bell’s bounds of classicality was achieved in the early 1980s by Alain Aspect and his collaborators. In 1982, the informational perspective on quantum theory began to take shape through fundamental results such as the No-cloning theorem, a particularly simple linear-algebraic result that nevertheless captures part of what appears to be the inherently analog nature of quantum information. Quantum information cannot be copied and is thus intrinsically tied to the physical system that represents it. This does not mean that quantum information on one physical support cannot be transferred to another, but rather that such a transfer cannot result in duplication.
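The linear-algebraic argument fits in a few lines. As a sketch, suppose there were a unitary $U$ and a fixed blank state $|0\rangle$ (notation introduced here for illustration) such that every state is copied:

\[U\,|\psi\rangle|0\rangle = |\psi\rangle|\psi\rangle, \qquad U\,|\phi\rangle|0\rangle = |\phi\rangle|\phi\rangle.\]

Unitaries preserve inner products, so comparing the inner product of the two inputs with that of the two outputs gives

\[\langle\psi|\phi\rangle\,\langle 0|0\rangle = \langle\psi|\phi\rangle = \big(\langle\psi|\phi\rangle\big)^2,\]

which forces $\langle\psi|\phi\rangle \in \{0, 1\}$. A single device can therefore copy states that are identical or mutually orthogonal, as classical bits are, but never two distinct non-orthogonal states.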

The no-cloning principle was followed in 1984 by another milestone: the BB84 protocol, based on an earlier idea by Wiesner, conceived around 1970 and published only in 1983, which aimed to create unforgeable banknotes using the no-cloning principle. The quantum protocol, designed by Charles Bennett and Gilles Brassard, can be understood as an attempt to address the inherent challenges of information-theoretic security. Instead of assuming that the channel available to the eavesdropper is noisy, the protocol leverages the Heisenberg uncertainty principle, a quantitative manifestation of the contextual nature of quantum theory, to ensure that the gap in mutual knowledge essential for unconditional security is fundamentally embedded in the fabric of reality.
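The mechanism can be illustrated with a purely classical simulation of the measurement statistics. This is a simplified sketch under several assumptions of our own: ideal noiseless devices, two bases labelled 0 and 1, and an eavesdropper restricted to the simple intercept-and-resend attack. Measuring in the wrong basis is modelled as returning a uniformly random bit.

```python
import random


def bb84_error_rate(n_qubits, eavesdrop, seed=0):
    """Simulate BB84 sifting and return the error rate on the sifted key."""
    rng = random.Random(seed)
    sifted_alice, sifted_bob = [], []

    for _ in range(n_qubits):
        bit = rng.randint(0, 1)
        alice_basis = rng.randint(0, 1)
        state_bit, state_basis = bit, alice_basis  # what is on the channel

        if eavesdrop:
            eve_basis = rng.randint(0, 1)
            # A wrong-basis measurement yields a random outcome.
            eve_bit = state_bit if eve_basis == state_basis else rng.randint(0, 1)
            # Eve resends the state she observed, in the basis she used.
            state_bit, state_basis = eve_bit, eve_basis

        bob_basis = rng.randint(0, 1)
        bob_bit = state_bit if bob_basis == state_basis else rng.randint(0, 1)

        # Sifting: keep only rounds where Alice's and Bob's bases agree.
        if bob_basis == alice_basis:
            sifted_alice.append(bit)
            sifted_bob.append(bob_bit)

    if not sifted_alice:
        raise ValueError("no rounds survived sifting")
    errors = sum(a != b for a, b in zip(sifted_alice, sifted_bob))
    return errors / len(sifted_alice)


print(bb84_error_rate(20000, eavesdrop=False))  # 0.0
print(bb84_error_rate(20000, eavesdrop=True))   # close to 0.25
```

Without an eavesdropper the sifted keys agree perfectly. With intercept-and-resend, Eve guesses the wrong basis half of the time and then disturbs Bob's outcome half of the time, so about a quarter of the sifted bits disagree. By comparing a sample of their key over the public channel, Alice and Bob can detect her presence.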

Quantum Theory and Information Security

It is worth recapping how we arrived at this point. The pessimism inherent in Shannon’s theory of secrecy systems fostered two parallel developments. On one side, it inspired new security protocols, such as RSA, which assume limits on the computational power of the attacker. On the other, it provided the impetus for a re-evaluation of such pessimism. Wyner’s work showed that a route to near-perfect secrecy could lie in a sufficiently large gap between the quality of information transmitted to Bob and that achieved by Eve’s malicious interception. Quantum theory seems to push this further, suggesting that such assumptions about asymmetry need not be imposed by hand but may instead be rooted in the fundamental laws of physics.

In his later work, Ueli Maurer did not hide his skepticism towards both of these developments. His laconic comment on Bennett’s and Brassard’s protocol leaves little room for debate: “[it] is based on the (unproven but plausible) uncertainty principle.” And how can we blame him? For a cryptographer, the uncertainty principle might feel no different from an unproven statement about the complexity of a computational task. The uncertainty principle can indeed be proven, but only within the framework of quantum mechanics. The malicious agent must strictly adhere to the bounds imposed by quantum mechanics, and the physical systems used to implement the protocol need to be certified.

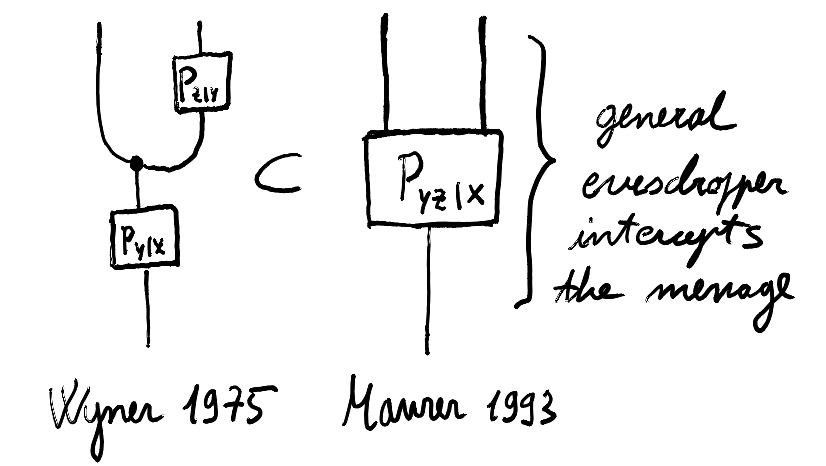

From this point of view, skepticism about these quantum protocols is understandable, but Maurer also criticized Wyner’s assumption as being unreasonable. There is no reason to believe that the channel to the eavesdropper would be noisier than the one shared between Alice and Bob. Maurer generalized this setting by considering an arbitrary broadcast channel, allowing for the possibility that Eve’s attack is described by an arbitrary probability distribution $P_{XYZ}$ connecting the three random variables. Contrast this with the causal structure of the wire-tap channel assumed by Wyner shown above:

Maurer extended Wyner’s work by decoupling secrecy from specific channel structures, instead leveraging any correlated information combined with public discussion. This shifted the focus from physical-layer limitations to information-theoretic techniques, making secret key agreement possible in diverse settings beyond the wiretap channel.

By allowing public interactive discussion between the parties, Maurer generalized Wyner’s secrecy capacity by defining the notion of secret key rate. The flexibility of this concept makes it applicable to cases where the degraded channel assumption is removed. The framework for secret key extraction that results from Maurer’s work accommodates general interactive protocols and arbitrary correlations. Maurer showed that the secret key rate is bounded above by the conditional mutual information $I(X; Y \mid Z)$, so the distillation of a shared secret key requires this quantity to be bounded away from zero. This quantity can be expressed in terms of conditional entropies:

\[I(X; Y \mid Z) = H(X \mid Z) - H(X \mid Y, Z).\]QKD protocols fall into this category, with one peculiarity: it is the belief that the parties share a specific quantum state that allows the conditional mutual information to be bounded away from zero, independently of how the quantum state is extended.
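Two extreme cases show how this quantity behaves. The sketch below uses made-up toy distributions: in the first, Alice and Bob share a perfectly correlated random bit about which Eve knows nothing; in the second, Eve holds a copy of that same bit. The helper names are our own.

```python
from math import log2


def entropy(dist):
    """Shannon entropy (in bits) of a distribution given as {outcome: prob}."""
    return -sum(p * log2(p) for p in dist.values() if p > 0)


def marginal(joint, keep):
    """Marginalize {(x, y, z): prob} onto the coordinates listed in `keep`."""
    out = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in keep)
        out[key] = out.get(key, 0.0) + p
    return out


def conditional_mutual_information(joint_xyz):
    """I(X;Y|Z) = H(X|Z) - H(X|Y,Z), written with joint entropies."""
    h_x_given_z = entropy(marginal(joint_xyz, (0, 2))) - entropy(marginal(joint_xyz, (2,)))
    h_x_given_yz = entropy(joint_xyz) - entropy(marginal(joint_xyz, (1, 2)))
    return h_x_given_z - h_x_given_yz


# Case 1: X = Y is a uniform bit, Z is an independent uniform bit.
eve_ignorant = {(b, b, z): 0.25 for b in (0, 1) for z in (0, 1)}

# Case 2: X = Y = Z, so Eve holds a perfect copy.
eve_copies = {(b, b, b): 0.5 for b in (0, 1)}

print(conditional_mutual_information(eve_ignorant))  # 1.0
print(conditional_mutual_information(eve_copies))    # 0.0
```

In the first case a full bit of correlation remains hidden from Eve, while in the second nothing is left to distill. The no-cloning principle is what prevents an eavesdropper from moving a quantum protocol into the second regime unnoticed.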

Device-independent Key Distribution

In a dialectical progression, the understanding of where secrecy originates has evolved from Shannon’s classical framework to quantum key distribution (QKD). While classical cryptography laid the groundwork for studying the quantitative advantages introduced by quantum mechanics, the quantum framework suggests that the assumption that the parties share a specific quantum state — combined with principles like no-cloning and Heisenberg’s uncertainty — fundamentally limits the information an eavesdropper can obtain. These principles, as highlighted by Bell’s theorem, reveal constraints on how classical agents can interact with quantum systems.

It should be possible to present, if not quantitatively then at least qualitatively, the principles enabling QKD in a theory-independent way. What is the source of the fundamental randomness at the core of these protocols? Clearly, the security guarantee is weakened by the necessity to assume that the state shared between the agents is a given quantum state. Eve — similarly to how Shor’s algorithm broke the hope of computational security of RSA — could have tampered with the devices, letting you think that the specific quantum description is valid while, under the hood, something very different is simulating the expected ‘external behavior’ of the device.

As brilliantly pointed out by the philosopher Itamar Pitowsky in his refreshing account of Bell’s inequality in George Boole’s ‘Conditions of Possible Experience’ and the Quantum Puzzle, we meet difficulties when trying to understand what is so revolutionary about quantum mechanics. The difference between quantum and classical physics is usually described using quantum mechanical jargon, but there must be a way to describe the phenomena that is independent of the very theory constructed to reconcile it with the rest of observation. Just as Maurer established a framework to study secrecy in classical communication protocols, there is now a need to explore how randomness extraction intertwines with more general theories of information processing — theories that include quantum mechanics but extend beyond it, without requiring belief in the specific mechanisms enabled by quantum mathematics. Understanding how QKD secrecy differs from other paradigms in a way that is independent of the theory itself is essential.

Just as Maurer answered the question ‘Where does secrecy come from?’ by appealing to conditional mutual information, recent advances in QKD, particularly in the device-independent approach, reveal that additional private secrecy is enabled by non-locality. The device-independent study of how secret correlations can be shared among multiple parties hints at an intriguing possibility: The way classical information theory has been understood so far may be too restrictive. An extension of information theory, one that incorporates contextuality in how information is manipulated, seems to vastly expand the landscape. It offers a new way to understand security that generalises the classical framework rather than merely imposing additional theory-dependent assumptions, weaving together the pieces of this multicolored tapestry.