Here is the second part of our dialogues, inspired by transcripts of meetings held at NeverLocal by me and our communications specialist, Ionut Gaucan. The aim here is to introduce the main ideas behind the first quantum key distribution protocol that uses entanglement to establish secrecy: E91, introduced by Arthur Ekert in 1991. You can probably guess the pattern. You may think that too much attention is devoted to principles and too little to delving into the technicalities of the protocol. That is, until you realise that the cryptographic protocols under scrutiny are closely aligned with the foundational concepts we have been uncovering in this series of blog posts. It is therefore of paramount importance to introduce them and digest them first.

Nicola: Last time we spoke about the standard, fairly simple protocol called BB84, a key-distribution scheme that lets Alice and Bob share random binary strings.

Let us briefly reiterate. Alice takes a quantum system, a qubit, and encodes a locally generated random bit into one of four states: $|0\rangle$, $|1\rangle$, $|+\rangle$, or $|-\rangle$. The states $|0\rangle$ and $|1\rangle$ are the computational-basis states; $|+\rangle$ and $|-\rangle$ form a different basis that is maximally incompatible with the computational one. In our previous discussion I described these as different “perspectives” or “contexts”. Choosing a basis is like choosing a viewpoint from which to interrogate the same system.

So, Alice generates a random bit and encodes it by choosing one of the two bases and then the state representing 0 or 1 within that basis. There are, in effect, two ways to prepare and measure; many more exist in general, but with qubits two complementary bases already show the key idea.

Alice sends the physical system to Bob. Bob does not know which basis Alice used, so he chooses his measurement basis at random: either the computational basis or the (+/−) basis, which is complementary to it. At this stage, Alice has a random string (from her local randomness), and Bob has a random string (from the intrinsic randomness of quantum measurement). Their strings are not yet correlated in the way they want, because in roughly half of the rounds Alice and Bob used incompatible bases.

After many rounds, they publicly announce which bases they used (not the outcomes). They then sift the data: they keep only the rounds where their bases matched and discard the rest. In the absence of noise and eavesdropping, the kept outcomes are perfectly correlated. One often says that $|0\rangle$ and $|1\rangle$ are orthogonal (perfectly distinguishable) states, while $|+\rangle$ and $|-\rangle$ are orthogonal superpositions of them, $|\pm\rangle = (|0\rangle \pm |1\rangle)/\sqrt{2}$ (the relative phase matters in the maths, but that detail is not essential here).
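The prepare, measure, and sift loop just described can be sketched as a toy simulation. It assumes an ideal, noiseless channel with no eavesdropper and models measurement classically: when Bob's basis matches Alice's he recovers her bit, otherwise his outcome is a fair coin flip. The function name `bb84_sift` and the labels `"Z"` (computational basis) and `"X"` (the +/− basis) are our own, hypothetical choices.

```python
import random

def bb84_sift(n_rounds, seed=0):
    """Toy BB84 run on an ideal channel: returns Alice's and Bob's sifted keys."""
    rng = random.Random(seed)
    alice_key, bob_key = [], []
    for _ in range(n_rounds):
        bit = rng.randint(0, 1)            # Alice's local random bit
        alice_basis = rng.choice("ZX")     # Z: computational basis, X: the (+/-) basis
        bob_basis = rng.choice("ZX")       # Bob picks his basis independently
        if bob_basis == alice_basis:
            outcome = bit                  # same context: Bob recovers Alice's bit
        else:
            outcome = rng.randint(0, 1)    # complementary context: a fair coin flip
        # Sifting: after the bases are announced, only matching rounds are kept.
        if bob_basis == alice_basis:
            alice_key.append(bit)
            bob_key.append(outcome)
    return alice_key, bob_key

alice_key, bob_key = bb84_sift(10_000)
print(len(alice_key), alice_key == bob_key)  # roughly half the rounds survive; the keys agree
```

Roughly half of the rounds are lost to sifting, which is the price paid for Bob not knowing Alice's basis in advance.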

The essential point is complementarity: you can choose two ways of observing the same system such that measuring in one way gives you no information about what you would have learned in the other. This guarantees that an interceptor cannot measure the system without, at least some of the time, disturbing it, and that disturbance breaks the perfect correlations that should be observed when both agents make the same choice of measurement.

Is that clear?

Ionut: So the basis mismatch is exactly what reveals an eavesdropper?

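Nicola: Yes, in the sense that whenever the eavesdropper measures in a basis different from Alice's, she risks disturbing the system. That disturbance shows up as a reduction of the correlations in the matched-basis subset.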

Ionut: Yes, in broad terms I think I get it.

Nicola: As I was explaining last time, this can be detected by publicly comparing a random subset of the sifted key, which is then discarded.
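To see why publishing a sample exposes an interceptor, the toy model can be extended with a simple intercept-resend attack, assuming Eve measures every qubit in a randomly chosen basis and resends the state she observed. Under this assumed attack the expected error rate in the sifted key is 25%: Eve picks the wrong basis half the time, and in those rounds Bob's outcome becomes a coin flip. The function names are hypothetical.

```python
import random

def bb84_with_eve(n_rounds, eavesdrop, seed=1):
    """Toy BB84 with an optional intercept-resend attacker; returns sifted keys."""
    rng = random.Random(seed)

    def measure(bit, prep_basis, meas_basis):
        # Same basis: the encoded bit is recovered; complementary basis: a fair coin.
        return bit if prep_basis == meas_basis else rng.randint(0, 1)

    alice_key, bob_key = [], []
    for _ in range(n_rounds):
        bit, a_basis, b_basis = rng.randint(0, 1), rng.choice("ZX"), rng.choice("ZX")
        sent_bit, sent_basis = bit, a_basis
        if eavesdrop:
            e_basis = rng.choice("ZX")
            sent_bit = measure(bit, a_basis, e_basis)   # Eve's outcome, which she resends
            sent_basis = e_basis                         # re-prepared in Eve's own basis
        outcome = measure(sent_bit, sent_basis, b_basis)
        if a_basis == b_basis:                           # sifting
            alice_key.append(bit)
            bob_key.append(outcome)
    return alice_key, bob_key

def estimate_qber(alice_key, bob_key, sample_size, seed=2):
    """Publicly compare a random sample of positions and return the error fraction."""
    positions = random.Random(seed).sample(range(len(alice_key)), sample_size)
    errors = sum(alice_key[i] != bob_key[i] for i in positions)
    return errors / sample_size

for eavesdrop in (False, True):
    a, b = bb84_with_eve(20_000, eavesdrop)
    print(eavesdrop, estimate_qber(a, b, 1_000))  # about 0.0 without Eve, about 0.25 with her
```

The sampled positions have been made public, so in a real run they would be removed from the key afterwards.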

Classical properties vs Contextual access

Nicola: This already shows a basic difference between classical and quantum systems. In classical physics, a system is described by definite properties: colour, size, shape, or, in mechanics, position and momentum. In quantum theory, objects cannot in general be assigned predefined values for all of their properties; they gain definite values only upon measurement, or, more precisely, in virtue of our choice to measure them.

And when you measure one observable precisely, you forfeit information about a complementary one. That is the content of the Heisenberg uncertainty principle.

Our discussion is a discrete version of that story: with qubits, measurement outcomes are 0 or 1 rather than continuous values.
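One way to make the discrete version quantitative, added here as an illustration rather than taken from the dialogue, is the Maassen-Uffink entropic uncertainty relation: for the computational basis and the (+/−) basis of a qubit, the Shannon entropies of the two outcome distributions satisfy H(Z) + H(X) ≥ 1 bit for every state. The sketch checks this numerically on random pure states; the helper names are our own.

```python
import cmath
import math
import random

def shannon(probs):
    """Shannon entropy in bits, ignoring zero-probability outcomes."""
    return sum(p * math.log2(1 / p) for p in probs if p > 1e-15)

def entropies(alpha, beta):
    """Entropies of Z- and X-basis outcomes for the state alpha|0> + beta|1>."""
    p_z = [abs(alpha) ** 2, abs(beta) ** 2]
    # |+> = (|0> + |1>)/sqrt(2) and |-> = (|0> - |1>)/sqrt(2)
    p_x = [abs(alpha + beta) ** 2 / 2, abs(alpha - beta) ** 2 / 2]
    return shannon(p_z), shannon(p_x)

rng = random.Random(0)
worst = float("inf")
for _ in range(10_000):
    theta = rng.uniform(0, math.pi)          # random pure state on the Bloch sphere
    phi = rng.uniform(0, 2 * math.pi)
    alpha = math.cos(theta / 2)
    beta = cmath.exp(1j * phi) * math.sin(theta / 2)
    h_z, h_x = entropies(alpha, beta)
    worst = min(worst, h_z + h_x)

print(entropies(1, 0))   # (0.0, 1.0): a definite Z value means a completely random X value
print(worst >= 1 - 1e-9)
```

The bound is reached by the basis states themselves: perfect knowledge in one context comes with maximal ignorance in the other.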

Ionut: So “property” in the classical sense means stability under interrogation?

Nicola: This is a very good question, one that is impossible to answer in a fully satisfactory way. It is, however, possible to make intuitive sense of it. Classicality, in some sense, is what remains stable under our interaction with nature. Imagine observing the world by laying down a net, a loose-threaded tapestry. The reality that filters through the loose threads, the mesh, is, of course, mesh-shaped itself: we lose the ability to perceive all the details that would form a seamless reality.

Using a different net, a different mesh, reveals facts compatible with this new perspective. We can try to fill in the invisible spots, to reconstruct continuity by introducing “hidden patterns”, “hidden variables”, and for any single net this works fine. It is when we use a different net that the structure we postulated for one becomes incompatible with what is shown through the mesh of the other.

So what, then, is classicality? It is impossible to answer definitively, though many physicists almost obsessively try, rather hopelessly in my opinion. Providing a description of the various pieces of the natural world that can be juxtaposed in ways compatible with the net’s “negative space” seems difficult. Not many people have taken the discussion about “classicality” seriously in the study of quantum foundations.

The nets themselves, however, the logical structures that at any time form the context of all the possible coexisting, commensurable properties, have a very specific structure. But it is a logical structure, a formal structure, one that is difficult to anchor to specific elements of what we call the real.

Ionut: Why are we talking about the foundational aspect of the theory? Cryptography is about a very concrete task; are we not muddying the waters?

Nicola: You are absolutely right to be sceptical; however, the strangeness of quantum mechanics has important empirical consequences. There are two options at this point. We can mystify everything in the quantum formalism, embrace it, and shut everything down by quoting Feynman: “Nobody understands quantum mechanics.”

I am more interested in the alternative: understanding quantum phenomena by explaining and discovering patterns using only the empirical, probabilistic part of the theory, that is, by using stable, verifiable features of the correlations it predicts to give meaning to many of its counterintuitive aspects.

Cryptography is, in this regard, one of the most important places where we can put this idea about foundations into practice. Explaining quantum mysteries from this theory-independent perspective becomes exactly what is needed to understand how correlations and data can guarantee the type of security that quantum cryptography promises.

Ionut: I think I have an intuition about the difference between quantum and classical information at this stage. We still need to talk about entanglement, though.

Correlation and Entanglement

Nicola: Exactly. Let’s move to today’s topic: entanglement, which underpins many quantum cryptographic protocols. It is sometimes misunderstood either as “just correlation” or as “magical faster-than-light communication”. It is neither. It is a kind of non-classical correlation: it does not enable superluminal signalling, but it goes beyond classical explanations.

Often described as “spooky action at a distance”, it is no spookier than the phenomenon that underlies all quantum mysteries, complementarity, the very subject of our previous discussion on BB84.

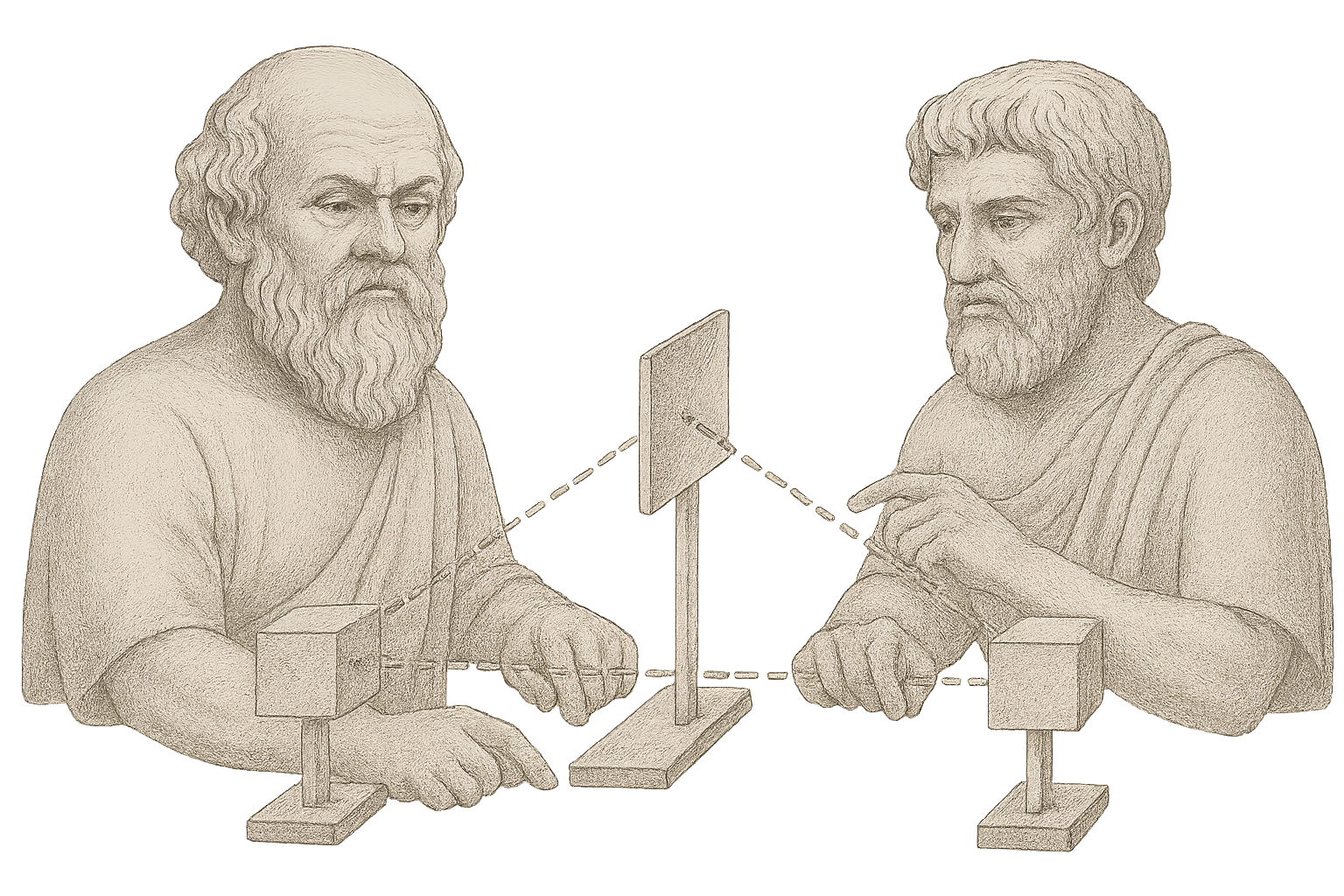

A classical analogy helps. Imagine I tear a playing card in half, seal each half in an envelope, and give one to Alice and one to Bob. The card could be red or black. If Alice opens her envelope and sees “red”, she immediately knows Bob’s half is “red” as well. That is a classical correlated preparation.

Last time I spoke about the fact that quantum systems are not defined by properties in the classical sense; rather, it is better to think in terms of the different ways we can access them, how we encode and decode classical information. We also saw that this process is not as innocent as it seems: it entails making choices that sometimes completely erase what could have been learned about a complementary observable.

Ionut: If properties are not predetermined, I suspect there will be problems in defining them as “being correlated”.

Nicola: Right, so how does this reflect on the correlation side? If quantum systems are not defined by definite properties and must be described operationally, what is the counterpart of being “correlated” in the quantum world? Suddenly this operational characterisation gives us more room: a larger set of potentially compatible behaviours. Let us single out two perspectives, two observables, for each subsystem, called $a_0$ and $a_1$ for subsystem $A$ (Alice's), and $b_0$ and $b_1$ for subsystem $B$ (Bob's). Then, keeping the operational view in mind, the possible outcomes, including the correlations, form a table of conditional probabilities $P(a, b \mid x, y)$. Each row fixes the choice $(x, y)$, where $x \in \{0, 1\}$ selects Alice's observable $a_x$ and $y \in \{0, 1\}$ selects Bob's observable $b_y$; each column gives a pair of outcomes $(a, b)$, each either $+$ or $-$:

| (x,y) \ (a,b) | (+,+) | (+,−) | (−,+) | (−,−) |
|---|---|---|---|---|
| (0,0) | 7.3% | 42.7% | 42.7% | 7.3% |
| (0,1) | 7.3% | 42.7% | 42.7% | 7.3% |
| (1,0) | 7.3% | 42.7% | 42.7% | 7.3% |
| (1,1) | 42.7% | 7.3% | 7.3% | 42.7% |
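The quantum table can be reproduced from a standard textbook formula, which we assume here rather than derive: for the Bell state $|\Phi^+\rangle = (|00\rangle + |11\rangle)/\sqrt{2}$ and ±1-valued measurements in the plane spanned by the two bases, at angles α for Alice and β for Bob, $P(a, b) = (1 + ab\cos(\alpha - \beta))/4$. The particular angles below are one hypothetical choice that produces the table; they are not taken from the dialogue.

```python
import math

# Hypothetical measurement angles: x selects Alice's a_x, y selects Bob's b_y.
ALICE_ANGLES = {0: 0.0, 1: -math.pi / 2}
BOB_ANGLES = {0: 3 * math.pi / 4, 1: -3 * math.pi / 4}
OUTCOMES = [(+1, +1), (+1, -1), (-1, +1), (-1, -1)]

def quantum_prob(a, b, x, y):
    """P(a, b | x, y) for |Phi+> with measurements in the Z-X plane."""
    correlator = math.cos(ALICE_ANGLES[x] - BOB_ANGLES[y])
    return (1 + a * b * correlator) / 4

for x in (0, 1):
    for y in (0, 1):
        row = [f"{100 * quantum_prob(a, b, x, y):.1f}%" for a, b in OUTCOMES]
        print((x, y), row)
# rows (0,0), (0,1), (1,0): 7.3% 42.7% 42.7% 7.3%; row (1,1): 42.7% 7.3% 7.3% 42.7%
```

The two values in the table are simply $(1 \mp 1/\sqrt{2})/4$.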

Ionut: So we have classical and quantum behaviours that can be described using these conditional-probability tables?

Nicola: Yes, here is an entirely classical behaviour, not very dissimilar from the previous example:

| (x,y) \ (a,b) | (+,+) | (+,−) | (−,+) | (−,−) |
|---|---|---|---|---|
| (0,0) | 12.5% | 37.5% | 37.5% | 12.5% |
| (0,1) | 12.5% | 37.5% | 37.5% | 12.5% |
| (1,0) | 12.5% | 37.5% | 37.5% | 12.5% |
| (1,1) | 37.5% | 12.5% | 12.5% | 37.5% |

So what unites these two behaviours? Evidently, many things. A subtle and important one is the no-signalling property. In both cases, the marginal probabilities (the single-party distributions obtained by summing the joint table over the other party’s outcomes), which describe the individual local behaviours while ignoring the correlations, are independent of the choice of measurement performed on the other subsystem. In both tables, for instance, each party sees + and − with probability 50%, whatever the other party chooses.
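The no-signalling property can be checked mechanically. The sketch below encodes both tables as given (percentages as exact fractions) and verifies that Alice's marginal does not depend on Bob's choice y, and vice versa. The dictionary layout and function names are our own choices.

```python
from fractions import Fraction

OUTCOMES = [("+", "+"), ("+", "-"), ("-", "+"), ("-", "-")]

def table(low, high):
    """Build P(a, b | x, y) with the sign pattern shared by both tables."""
    anti = dict(zip(OUTCOMES, [low, high, high, low]))   # rows (0,0), (0,1), (1,0)
    corr = dict(zip(OUTCOMES, [high, low, low, high]))   # row (1,1)
    return {(x, y): (corr if (x, y) == (1, 1) else anti) for x in (0, 1) for y in (0, 1)}

def is_no_signalling(p):
    """True if each party's marginal is independent of the other party's setting."""
    for x in (0, 1):
        for a in "+-":
            # Alice's marginal P(a | x, y), summed over Bob's outcome, for y = 0 and y = 1
            if len({sum(p[(x, y)][(a, b)] for b in "+-") for y in (0, 1)}) != 1:
                return False
    for y in (0, 1):
        for b in "+-":
            # Bob's marginal P(b | x, y), summed over Alice's outcome, for x = 0 and x = 1
            if len({sum(p[(x, y)][(a, b)] for a in "+-") for x in (0, 1)}) != 1:
                return False
    return True

quantum = table(Fraction(73, 1000), Fraction(427, 1000))     # 7.3% / 42.7%
classical = table(Fraction(125, 1000), Fraction(375, 1000))  # 12.5% / 37.5%
print(is_no_signalling(quantum), is_no_signalling(classical))  # True True
```

A table in which Alice's outcome simply copied Bob's setting y would fail this check, which is exactly the kind of behaviour no-signalling forbids.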

What they do not have in common is this: the second table can be obtained as a statistical mixture of deterministic behaviours, while the first cannot. Only the second can be explained by an underlying deterministic mechanism obscured by randomness. In the classical case this counterfactual mechanism always arises from properties that exist prior to and independent of measurement, merely revealed at the moment of measurement.
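The claim about mixtures can be verified directly. A deterministic local behaviour fixes ±1 outcomes $(a_0, a_1)$ for Alice and $(b_0, b_1)$ for Bob in advance. No such assignment can produce anticorrelation for (0,0), (0,1), (1,0) together with correlation for (1,1); at best it matches three of the four. Averaging uniformly over the eight assignments that do so reproduces the classical table exactly. To make the contrast quantitative, the sketch also evaluates the CHSH combination S = E(0,0) + E(0,1) + E(1,0) − E(1,1), where E is the average product of outcomes. This is our addition, using the standard fact that local deterministic models and their mixtures obey |S| ≤ 2. The classical table sits exactly on that bound, while the quantum one reaches 2√2 ≈ 2.83.

```python
import itertools
import math
from fractions import Fraction

# Sign pattern of both tables: anticorrelation (-1) except for settings (1, 1).
TARGET = {(0, 0): -1, (0, 1): -1, (1, 0): -1, (1, 1): +1}

def satisfied(strategy):
    """Number of settings (x, y) on which a deterministic strategy matches TARGET."""
    a, b = strategy
    return sum(a[x] * b[y] == s for (x, y), s in TARGET.items())

# All 16 deterministic assignments of +/-1 outcomes to a_0, a_1, b_0, b_1.
strategies = [(a, b) for a in itertools.product((1, -1), repeat=2)
              for b in itertools.product((1, -1), repeat=2)]
best = [s for s in strategies if satisfied(s) == 3]     # none satisfies all four

def mixture_prob(a_out, b_out, x, y):
    """P(a, b | x, y) for a uniform mixture of the best deterministic strategies."""
    hits = sum(1 for a, b in best if a[x] == a_out and b[y] == b_out)
    return Fraction(hits, len(best))

def chsh(correlator):
    return correlator(0, 0) + correlator(0, 1) + correlator(1, 0) - correlator(1, 1)

def classical_E(x, y):
    return sum(a * b * mixture_prob(a, b, x, y) for a in (1, -1) for b in (1, -1))

def quantum_E(x, y):
    # Correlators of the quantum table: -1/sqrt(2), except +1/sqrt(2) for (1, 1).
    return TARGET[(x, y)] / math.sqrt(2)

print(len(best), mixture_prob(1, 1, 0, 0), mixture_prob(1, -1, 0, 0))  # 8 1/8 3/8
print(chsh(classical_E), chsh(quantum_E))   # -2 and about -2.83
```

The sign of S only reflects the labelling of outcomes in these tables; what matters is its magnitude.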

In quantum mechanics, by contrast, we can prepare systems where certain properties do not have predetermined values before measurement, and yet measurement results are correlated. That is entanglement in a nutshell: correlations without pre-existing local values.

Ionut: So where does this leave cryptography? How do these correlations become a key?

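Nicola: Well, the entangled, non-classical behaviour we showed earlier comes with characteristic patterns of correlation between the measurement outcomes. If Alice and Bob also have settings that are aligned with each other, the outcomes of those rounds are, ideally, perfectly correlated, and these establish the shared key; the rounds with the other settings are used to check that the correlations are genuinely non-classical.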

To sharpen the classical comparison, take cards with two attributes: colour (red/black) and suit (hearts, diamonds, clubs, spades). Pretend Alice and Bob can choose to “measure” either colour or suit. To keep it binary, group the suits into two classes (say, diamonds+spades vs hearts+clubs); each class contains one red and one black suit, so the suit class carries no information about colour. If Alice and Bob always check the same attribute (both colour or both suit class), their outcomes are perfectly correlated because they hold halves of the same card. If they check different attributes, the results need not be correlated in any systematic way; with this grouping they are in fact uncorrelated. This is a classical system that already yields a family of conditional probabilities depending on the chosen “measurement”, but it cannot reproduce certain quantum correlations. That gap, what quantum correlations can do that classical ones cannot, is what entanglement reveals and what Bell’s theorem formalises.
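The card model can be written out explicitly. The sketch below treats setting 0 as colour and setting 1 as suit class, encodes outcomes as ±1, and computes the correlators over a full 52-card deck. It then evaluates the four CHSH combinations, each sum of the four correlators with one term given a minus sign. Finally, it checks that no pre-assigned set of outcomes exceeds |S| = 2. A random card is a mixture of such assignments, so the deck cannot exceed it either. The encoding and names are our own illustrative choices.

```python
import itertools
from fractions import Fraction

SUITS = ["hearts", "diamonds", "clubs", "spades"]
RANKS = range(1, 14)

def colour(suit):
    return +1 if suit in ("hearts", "diamonds") else -1   # red = +1, black = -1

def suit_class(suit):
    return +1 if suit in ("diamonds", "spades") else -1   # diamonds+spades vs hearts+clubs

ATTRIBUTES = [colour, suit_class]   # setting 0 = colour, setting 1 = suit class

def correlator(x, y):
    """E(x, y) over a full deck: Alice checks attribute x, Bob attribute y of the same card."""
    deck = [(suit, rank) for suit in SUITS for rank in RANKS]
    total = sum(ATTRIBUTES[x](suit) * ATTRIBUTES[y](suit) for suit, _ in deck)
    return Fraction(total, len(deck))

def chsh_variants(E):
    """The four CHSH combinations of a 2x2 correlator table, one per choice of minus sign."""
    terms = [E[0][0], E[0][1], E[1][0], E[1][1]]
    return [sum(terms) - 2 * t for t in terms]

deck_E = [[correlator(x, y) for y in (0, 1)] for x in (0, 1)]
print([[float(e) for e in row] for row in deck_E])   # [[1.0, 0.0], [0.0, 1.0]]
print(max(abs(s) for s in chsh_variants(deck_E)))    # 2

# Every card fixes all outcomes in advance; so does any deterministic local assignment.
best = 0
for a0, a1, b0, b1 in itertools.product((1, -1), repeat=4):
    E = [[a * b for b in (b0, b1)] for a in (a0, a1)]
    best = max(best, max(abs(s) for s in chsh_variants(E)))
print(best)   # 2: pre-assigned values never exceed |S| = 2
```

The quantum table discussed earlier reaches |S| = 2√2 ≈ 2.83, which is precisely the gap this classical model cannot close.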

Entanglement to certify security: Ekert 1991

Ionut: How is entanglement used for cryptographic protocols?

Nicola: Imagine Eve prepares a long sequence of cards at random, each independently red or black with probability one-half, tears each card in two, and distributes matching halves to Alice and Bob. If they later compare notes in order, they find perfect correlations on the pairs where they “measured” the same property, colour in this case. Over many trials, the joint outcomes concentrate on the perfectly correlated pairs (red-red and black-black), each with probability one-half. But Eve knows every card she prepared, so she holds exactly the same information.

So in these classical cases the correlations possessed by the two agents can just as well be shared with Eve. Correlations that exceed the classical bound, which entanglement makes possible, cannot have such a purely classical source, one that could be copied and broadcast by an eavesdropper.
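The weakness of a classical source can be made concrete with a toy model that goes beyond the card example; its names and settings are hypothetical. Each round, Eve draws pre-assigned ±1 values for Alice's two test settings and Bob's two test settings. She picks them among the deterministic assignments that score best on the CHSH combination S = E(0,0) + E(0,1) + E(1,0) − E(1,1), where E is the average product of outcomes. On a third, key setting, she hands Bob a copy of Alice's value for her setting 0. Alice and Bob end up with identical key bits, but so does Eve, and the estimated S stays at the classical value 2 instead of approaching 2√2 ≈ 2.83.

```python
import itertools
import random

SIGNS = {(0, 0): 1, (0, 1): 1, (1, 0): 1, (1, 1): -1}   # S = E00 + E01 + E10 - E11

def optimal_local_strategies():
    """Deterministic assignments ((a0, a1), (b0, b1)) matching 3 of the 4 CHSH signs."""
    out = []
    for a0, a1, b0, b1 in itertools.product((1, -1), repeat=4):
        a, b = (a0, a1), (b0, b1)
        if sum(a[x] * b[y] == s for (x, y), s in SIGNS.items()) == 3:
            out.append((a, b))
    return out

def run_with_classical_source(n_rounds, seed=0):
    rng = random.Random(seed)
    strategies = optimal_local_strategies()
    sums = {xy: 0 for xy in SIGNS}
    counts = {xy: 0 for xy in SIGNS}
    alice_key, bob_key, eve_key = [], [], []
    for _ in range(n_rounds):
        a, b = rng.choice(strategies)        # Eve's pre-assigned values for this round
        x, y = rng.randint(0, 1), rng.randint(0, 2)
        if y == 2:                            # Bob's key setting, aligned with Alice's 0
            if x == 0:
                alice_key.append(a[0])
                bob_key.append(a[0])          # Eve gave Bob the matching half...
                eve_key.append(a[0])          # ...and kept a record for herself
        else:                                 # test round
            sums[(x, y)] += a[x] * b[y]
            counts[(x, y)] += 1
    S = sum(SIGNS[xy] * sums[xy] / counts[xy] for xy in SIGNS)
    return S, alice_key, bob_key, eve_key

S, alice_key, bob_key, eve_key = run_with_classical_source(100_000)
print(round(S, 2), alice_key == bob_key == eve_key)   # S close to 2, never near 2.83; True
```

Perfect key correlations alone therefore certify nothing: only the test statistic distinguishes this source from an entangled one.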

Arthur Ekert in 1991 proposed a protocol now known as E91. Instead of single systems sent from Alice to Bob, there is a source of entangled pairs. One particle from each pair goes to Alice and the other to Bob. The source need not be trusted. On every round, each party chooses a measurement setting at random. When the settings are aligned, the outcomes are, ideally, perfectly correlated; those data are sifted to form the raw key. When the settings are deliberately misaligned, the data are used as a test sample. From that sample, Alice and Bob estimate whether the observed pattern of correlations exceeds a classical bound (in Ekert’s proposal, the bound of a CHSH-type Bell inequality). If it does not, the data admit a classical explanation and the run is discarded. If it does, the experiment itself serves as a verification that the correlations arise from entanglement rather than from pre-existing local values.
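For comparison, here is a toy version of an entanglement-based run, with outcomes sampled from quantum predictions rather than produced by a physical device. It assumes the Bell state |Φ+⟩, for which ±1 outcomes at analyser angles α and β have uniformly random marginals and P(a = b) = (1 + cos(α − β))/2. It also uses one hypothetical choice of settings: Alice at 0 or π/2, Bob at π/4, −π/4, or 0, the last being a key setting aligned with Alice's 0. Ekert's 1991 proposal used a singlet state and three analyser orientations on each side, so this CHSH-style variant is a simplification, not a reproduction of the original protocol.

```python
import math
import random

ALICE = [0.0, math.pi / 2]                 # settings x = 0, 1 (x = 0 doubles as the key setting)
BOB = [math.pi / 4, -math.pi / 4, 0.0]     # test settings y = 0, 1; y = 2 is the key setting
SIGNS = {(0, 0): 1, (0, 1): 1, (1, 0): 1, (1, 1): -1}   # S = E00 + E01 + E10 - E11

def sample_pair(alpha, beta, rng):
    """Sample +/-1 outcomes for |Phi+>: uniform marginals, P(a == b) = (1 + cos(alpha - beta)) / 2."""
    a = rng.choice((1, -1))
    same = rng.random() < (1 + math.cos(alpha - beta)) / 2
    return a, (a if same else -a)

def run_e91_sketch(n_rounds, seed=0):
    rng = random.Random(seed)
    sums = {xy: 0 for xy in SIGNS}
    counts = {xy: 0 for xy in SIGNS}
    alice_key, bob_key = [], []
    for _ in range(n_rounds):
        x, y = rng.randint(0, 1), rng.randint(0, 2)   # independent random settings
        a, b = sample_pair(ALICE[x], BOB[y], rng)
        if y == 2:
            if x == 0:                                 # aligned settings: raw key
                alice_key.append(a)
                bob_key.append(b)
        else:                                          # misaligned settings: test sample
            sums[(x, y)] += a * b
            counts[(x, y)] += 1
    S = sum(SIGNS[xy] * sums[xy] / counts[xy] for xy in SIGNS)
    return S, alice_key, bob_key

S, alice_key, bob_key = run_e91_sketch(100_000)
if S <= 2:
    print("no violation of the classical bound: discard the run")
else:
    print(f"S = {S:.2f} > 2; {len(alice_key)} raw key bits, identical: {alice_key == bob_key}")
```

Rounds with Bob's key setting and Alice's setting 1 are simply thrown away here; a real protocol would tune the setting probabilities to waste fewer rounds.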

Ionut: So the test is the part that rules out hidden variables?

Nicola: Yes. After verification, they reconcile small discrepancies due to noise and then apply privacy amplification to reduce any information potentially available to an eavesdropper. The amount of compression is chosen according to the strength of the test. In this way, verification precedes trust. The protocol does not rely on trusting the source; it relies on the observed structure of correlations. This is the practical link between E91 and entanglement verification: the same experiment that generates correlated bits also checks that those bits could not have been produced by a classical hidden-variable model.
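How the test strength sets the compression can be illustrated with one bound known from the device-independent QKD literature, borrowed here as an assumption rather than as the security analysis of E91 itself. Against collective attacks and in the asymptotic limit, a key rate r ≥ 1 − h(Q) − h((1 + √((S/2)² − 1))/2) is achievable, where h is the binary entropy, Q the error rate on the key rounds, and S the CHSH value. The sketch evaluates that bound and then compresses a reconciled key with a random binary matrix, a simple universal hash over GF(2). The function names and test values are hypothetical, and the finite-size corrections needed in practice are ignored.

```python
import math
import random

def binary_entropy(p):
    """h(p) in bits, with h(0) = h(1) = 0."""
    if p <= 0 or p >= 1:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def di_key_rate(S, Q):
    """Assumed asymptotic bound (collective attacks): 1 - h(Q) - h((1 + sqrt((S/2)^2 - 1)) / 2)."""
    if S <= 2:
        return 0.0                                   # no violation: nothing can be certified
    s = min(S, 2 * math.sqrt(2))                     # estimates may overshoot 2*sqrt(2)
    eve_info = binary_entropy((1 + math.sqrt((s / 2) ** 2 - 1)) / 2)
    return max(0.0, 1 - binary_entropy(Q) - eve_info)

def privacy_amplify(key_bits, out_len, seed):
    """Compress key_bits with a random binary matrix (universal hashing over GF(2)).

    The seed is announced publicly so that Alice and Bob apply the same matrix."""
    rng = random.Random(seed)
    out = []
    for _ in range(out_len):
        row = [rng.randint(0, 1) for _ in key_bits]             # one random matrix row
        out.append(sum(r & k for r, k in zip(row, key_bits)) % 2)
    return out

rng = random.Random(7)
reconciled = [rng.randint(0, 1) for _ in range(512)]   # stand-in for an error-corrected key
rate = di_key_rate(2.7, 0.02)                          # hypothetical test results
final_key = privacy_amplify(reconciled, int(rate * len(reconciled)), seed=2024)
print(round(rate, 3), len(final_key))
```

At S = 2√2 and Q = 0 the bound gives one secret bit per key round, while at S ≤ 2 it gives none, mirroring the idea that the compression is dictated by the strength of the test.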

Ionut: To conclude, can we summarise by saying that Ekert’s protocol, by certifying non-locality, also certifies the secrecy of some of the correlated outcomes? Is that correct?

Nicola: This is an interesting point. Ekert’s protocol is not dissimilar to modern device-independent quantum key distribution (DIQKD, for short). What prevents it from being DIQKD is the nature of its security proof. Security is not guaranteed by the correlations themselves but by the knowledge that certain correlations must be produced by particular kinds of quantum resources. It therefore depends on the assumption that the devices behave in a certain way: at the very least, that they probe a quantum system of a particular “dimensionality”, that is, of a certain size. As such, it cannot be said to be fully device-independent. To prove device-independent security, the relationship between an eavesdropper’s maximal knowledge and contextual/non-local correlations has to be established in a fully device-independent framework. We have already hinted at the reason, but many of the pieces still need to find their correct place in the narrative.

The problem here is similar to saying that no-cloning guarantees security. That is certainly true in principle, but it relies on the belief that the protocol probes a certain type of quantum system. It is a very different matter to prove, from correlations, that something contextual is happening and therefore that a non-classical description is a logical necessity which, as a consequence, would satisfy a form of no-cloning. We can even bypass no-cloning altogether and describe its embodiment in the purest empirical way, through the principle of the monogamy of non-locality. But this is a great deal of material, enough for our next discussion.